According to Roger Stanton, any information process can be described at multiple levels. In this brief interview, Stanton describes the tri-level hypothesis, which holds that artificial or mental information-processing events can be examined on at least three levels. He also explains how neuroscientist David Marr developed the tri-level hypothesis in 1982.

Interviewing Experts: What are the levels of Marr’s hypothesis?

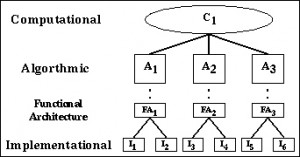

Roger Stanton: There are three levels: computational, algorithmic, and implementational.

Interviewing Experts: How is the computational level defined?

Roger Stanton: It is the most abstract of the three. In studying the computational level, a researcher will be concerned with two different tasks.

Interviewing Experts: What is the first task?

Roger Stanton: The first is a clear delineation of what the specific problem actually is. Breaking the problem down into its main parts usually offers some clarity into the situation.

Interviewing Experts: What do you mean by “a clear delineation of what the specific problem actually is”?

Roger Stanton: It means describing the problem in a precise way such that the problem can be investigated using formal methods. At this stage, determination of the problem is critical.

Interviewing Experts: So then, what is the second task?

Roger Stanton: The second task at the computational level concerns the specific reason or purpose of a particular process.

Interviewing Experts: How do researchers typically address this task?

Roger Stanton: As part of the second task, a researcher will ask, “Why is this process here in the first place?” In this part of an analysis, researchers will focus on the idea of adaptiveness.

Interviewing Experts: And how would you further describe adaptiveness?

Roger Stanton: It’s the idea that human mental processes have evolved or have been learned in a way that enables us to solve the given problem.

Interviewing Experts: How does that happen?

Roger Stanton: Here, we must employ an algorithm, the second level of the hypothesis. It’s defined as a formal system that acts on informational representations.

Interviewing Experts: Can you explain that in layman’s terms?

Roger Stanton: Sure. Algorithms are essentially “actions” used to change or manipulate representations. An algorithm can be thought of as a set of steps that always produces the same outcome.

Interviewing Experts: Are algorithms constant?

Roger Stanton: Algorithms are well-defined. A researcher will know precisely what occurs at each step, as well as how these steps affect the information. Using computers as an analogy, the algorithmic level is similar to software.
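The difference between the computational and algorithmic levels can be illustrated with a minimal Python sketch. It is an illustration only and does not come from the interview: the function names and the basket of prices are hypothetical. The computational-level description is simply "return the sum of the prices". The two functions are different algorithms that satisfy that same description, each with steps that are fully specified.

```python
from typing import List


def running_total(prices_in_cents: List[int]) -> int:
    """Algorithm A: walk the list once, keeping a running total."""
    total = 0
    for price in prices_in_cents:
        total += price
    return total


def pairwise_total(prices_in_cents: List[int]) -> int:
    """Algorithm B: split the list in half, total each half, then combine."""
    if not prices_in_cents:
        return 0
    if len(prices_in_cents) == 1:
        return prices_in_cents[0]
    mid = len(prices_in_cents) // 2
    return pairwise_total(prices_in_cents[:mid]) + pairwise_total(prices_in_cents[mid:])


# Hypothetical input: three prices, in cents.
basket = [250, 199, 1050]

# Same problem, same answer, different sequence of steps.
assert running_total(basket) == pairwise_total(basket) == 1499
```

Both functions could run on any machine that supports Python, which reflects the separation of the algorithmic level from the implementational level.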

Interviewing Experts: How so?

Roger Stanton: Software will contain a set of instructions designed for data processing. Finally, the third level is implementational.

Interviewing Experts: What questions will be asked at this time?

Roger Stanton: Questions might be, “What is the information processor made of?” or, “What types of material or physical changes underlie the changes seen?” This level is sometimes referred to as the “hardware level.” That is because, in a sense, hardware describes the physical “stuff” of which an information-processing system is made, whether it’s a human or a machine.

Interviewing Experts: And what is this “stuff”?

Roger Stanton: In humans, it involves some explanation of the central and peripheral nervous systems. This might be an explanation at a more global level, like the areas of the brain, or an explanation at a more local level, like the neural circuitry that is involved. In computers, however, it involves the computer’s various parts, such as the input device and the hard drive. The hardware in machines consists of circuits and the flow of electrons through them. The hardware in animal or human cognition is the nervous system.

Interviewing Experts: Fascinating. Thank you for sharing with us.

Roger Stanton: You’re welcome!

Roger Stanton is a cognitive psychologist whose research focuses on elements of old-new recognition and categorization.
